Let’s configure PySpark in PyCharm on Ubuntu.

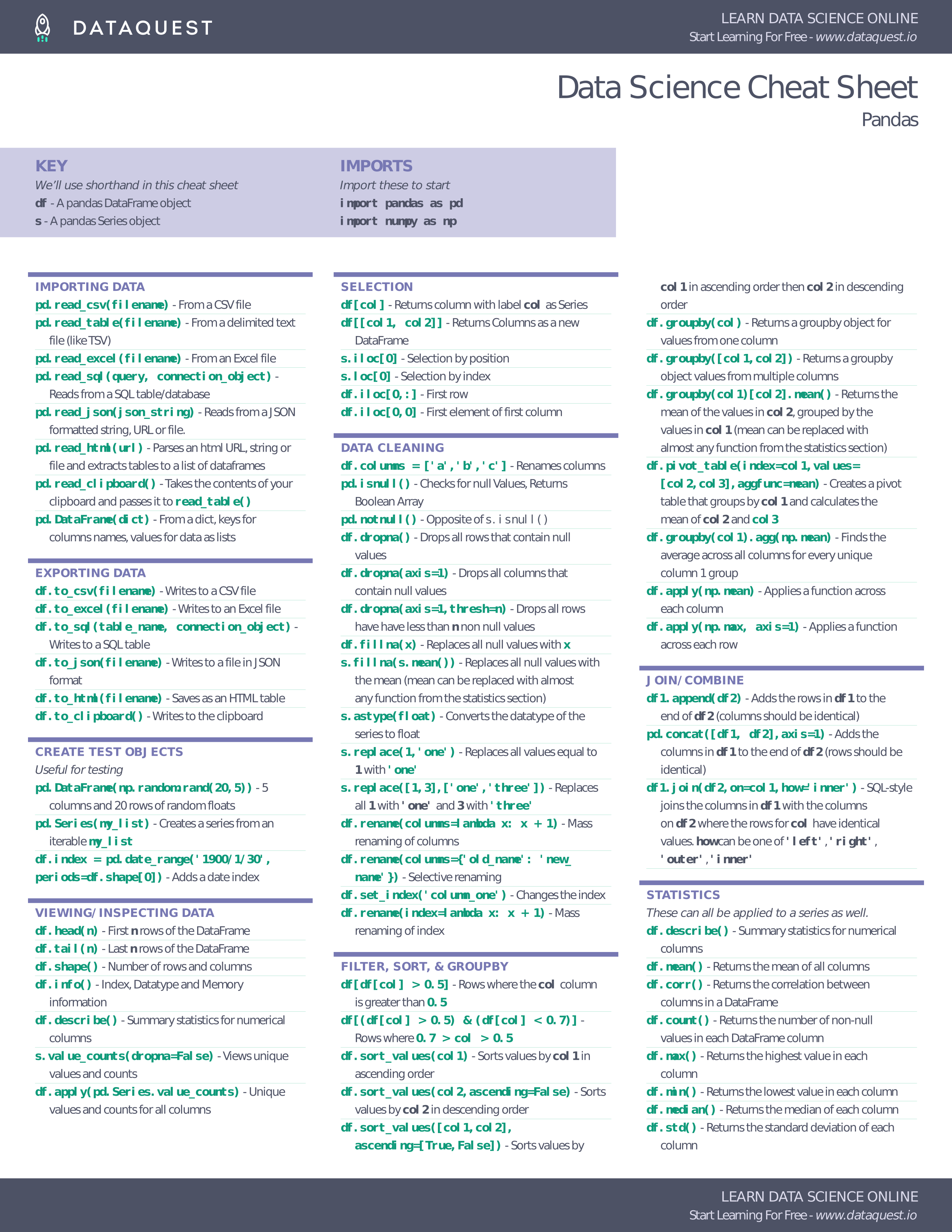

When using Spark to read data from a SQL database for further pipeline processing, it’s recommended to partition the read on the natural segments in the data, or at least on an integer column. That way Spark can issue multiple SQL queries against the SQL server in parallel, and the results of each query land in their own Spark partition.


First, download Spark from the official downloads page: http://spark.apache.org/downloads.html

The configuration is a simple two-step process.

First, set the Spark home variable, SPARK_HOME, in ‘/etc/environment’:

SPARK_HOME=location-to-downloaded-spark-folder


In my case, the location of the downloaded Spark folder is /home/pujan/Softwares/spark-2.0.0-bin-hadoop2.7

Remember to restart your system afterwards so that the environment variables are reloaded.
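After the restart, a quick check can confirm that the variable is visible to Python. The snippet below is only a minimal sketch: the helper name `describe_spark_home` is made up for this illustration, and it merely checks that SPARK_HOME is set and points to an existing directory.

```python
import os


def describe_spark_home(env=None):
    """Return a short status message about the SPARK_HOME variable.

    `env` defaults to the real process environment; passing a plain dict
    makes the helper easy to try out without touching the system.
    """
    env = os.environ if env is None else env
    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        return "SPARK_HOME is not set"
    if not os.path.isdir(spark_home):
        return "SPARK_HOME is set to %s, but that directory does not exist" % spark_home
    return "SPARK_HOME points to %s" % spark_home


# Report the state of the current environment.
print(describe_spark_home())
```

If the message says the variable is not set, the entry in ‘/etc/environment’ was probably not picked up yet.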


Second, in the PyCharm IDE, open the project in which you want to configure PySpark and go to File -> Settings.

Then, in the project section, click on “Project Structure”.

We need to add two files, py4j-0.10.1-src.zip and pyspark.zip, as ‘Content Root’ entries under ‘Project Structure’.


In my case, the project’s name is Katyayani, so the menu path is Settings -> Project: Katyayani -> Project Structure. On the right side, click on ‘Add Content Root’ and add ‘py4j-0.10.1-src.zip’ [/home/pujan/Softwares/spark-2.0.0-bin-hadoop2.7/python/lib/py4j-0.10.1-src.zip] and ‘pyspark.zip’ [/home/pujan/Softwares/spark-2.0.0-bin-hadoop2.7/python/lib/pyspark.zip].
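Adding the two archives as content roots essentially makes them importable from the project. For scripts run outside PyCharm, roughly the same effect can be sketched by hand. This is an assumption-laden illustration, not part of the PyCharm setup itself: the helper names `find_pyspark_archives` and `add_archives_to_path` are hypothetical, and the `python/lib` layout is taken from the paths shown above.

```python
import glob
import os
import sys


def find_pyspark_archives(spark_home):
    """Return the py4j source zip(s) and pyspark.zip found under spark_home/python/lib."""
    lib_dir = os.path.join(spark_home, "python", "lib")
    # The py4j version differs between Spark releases, so match it with a wildcard.
    archives = sorted(glob.glob(os.path.join(lib_dir, "py4j-*-src.zip")))
    pyspark_zip = os.path.join(lib_dir, "pyspark.zip")
    if os.path.isfile(pyspark_zip):
        archives.append(pyspark_zip)
    return archives


def add_archives_to_path(archives):
    """Append each archive to sys.path once, so Python can import from the zips."""
    for archive in archives:
        if archive not in sys.path:
            sys.path.append(archive)


# Assumes SPARK_HOME was set as described earlier; prints an empty list otherwise.
archives = find_pyspark_archives(os.environ.get("SPARK_HOME", ""))
add_archives_to_path(archives)
print(archives)
```

With the Spark 2.0.0 download used here, the printed list should contain the same two files that were added as content roots.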

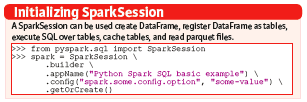

After this configuration, let’s test that we can access Spark from PySpark. For this, write a Python script in PyCharm. The following screenshot shows a very simple Python script and the log message of a successful interaction with Spark.
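For readers who want something to type in, here is one possible test script. It is not necessarily the script from the screenshot, just a minimal sketch: the function names and the application name "pycharm-smoke-test" are made up for this example, and `run_smoke_test` assumes that a working Java installation is available for Spark to start in local mode.

```python
def pyspark_is_importable():
    """Return True if the pyspark package can be imported from this project."""
    try:
        import pyspark  # noqa: F401
    except ImportError:
        return False
    return True


def run_smoke_test():
    """Start a local SparkContext, sum the numbers 0..99 on it, and return the result."""
    # Imported here so the module itself still loads when pyspark is missing.
    from pyspark import SparkContext

    sc = SparkContext("local[1]", "pycharm-smoke-test")
    try:
        return sc.parallelize(range(100)).sum()
    finally:
        # Always release the context, even if the job fails.
        sc.stop()
```

If `pyspark_is_importable()` returns True, calling `print(run_smoke_test())` should print 4950 after Spark's startup log lines. If it returns False, the content root entries are the first thing to re-check.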


And this concludes the configuration of PySpark in PyCharm.